
An Introduction to Markov Decision Process

A Markov Decision Process is memoryless: the next state depends only on the current state and the action taken, not on the states that came before.

Google’s PageRank, developed by Sergey Brin and Larry Page, is built on Markov chains, the memoryless model that also underlies the Markov Decision Process (MDP), which makes it one of the most widely used applications of the Markov property.

What is MDP?

A Markov Decision Process (MDP) is a mathematical framework for sequential decision making and a dynamic optimization method for stochastic, discrete-time control processes.

The Markov property is the memoryless property of a stochastic process, as proposed by Andrei Markov: given the current state, the future is independent of the past.

Components of MDP

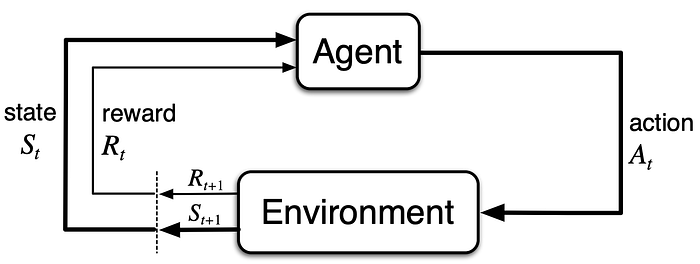

The learner or the decision maker, called the Agent, interacts continually with its Environment by performing actions sequentially at each discrete time step. Interaction of the Agent with its Environment changes the Environment's state, and as a result, the Agent receives a numerical reward from the Environment.

The MDP and the Agent together generate a sequence, or trajectory, of states, actions, and rewards over time. The trajectory begins with the Agent in state S₀ and continues until it reaches the final state.
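The interaction loop described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the two-state Environment, its state and action names, the 0.8 probability, the reward values, and the random Agent are all assumptions made up for this example.

```python
import random

# Hypothetical two-state Environment, invented for illustration only.
STATES = ["low", "high"]
ACTIONS = ["wait", "work"]

def step(state, action, rng):
    """Return (next_state, reward) for one discrete time step."""
    if action == "work":
        # Assumed dynamics: working leads to "high" with probability 0.8.
        next_state = "high" if rng.random() < 0.8 else "low"
    else:
        # Assumed dynamics: waiting leaves the state unchanged.
        next_state = state
    # Assumed reward: 1.0 for landing in "high", 0.0 otherwise.
    reward = 1.0 if next_state == "high" else 0.0
    return next_state, reward

def run_episode(num_steps, seed=0):
    """Generate a trajectory of (state, action, reward) tuples starting from S0."""
    rng = random.Random(seed)
    state = "low"  # S0, the initial state
    trajectory = []
    for _ in range(num_steps):
        action = rng.choice(ACTIONS)  # a random Agent that does no learning
        next_state, reward = step(state, action, rng)
        trajectory.append((state, action, reward))
        state = next_state
    return trajectory

trajectory = run_episode(num_steps=5)
for state, action, reward in trajectory:
    print(state, action, reward)
```

Note that `step` only looks at the current state and action, never at earlier steps, which is the memoryless property in practice.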

A probability distribution P(s′ | s, a) represents the probability of passing from one state (s) to another (s′) when taking action a. The transition probability T(s, a, s′) specifies the probability of ending up in state s′ when taking action a in state s.
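One simple way to represent the transition probabilities is a lookup table keyed by state and action. The sketch below assumes a hypothetical two-state model; the state names, action names, and probabilities are invented for illustration, and `transition_prob`, `is_valid`, and `sample_next_state` are helper names chosen for this example.

```python
import random

# Hypothetical transition model: T[(s, a)] maps each next state to its probability.
T = {
    ("low", "wait"): {"low": 1.0},
    ("low", "work"): {"low": 0.2, "high": 0.8},
    ("high", "wait"): {"low": 0.1, "high": 0.9},
    ("high", "work"): {"low": 0.2, "high": 0.8},
}

def transition_prob(s, a, s_next):
    """Probability of ending up in s_next when taking action a in state s."""
    return T[(s, a)].get(s_next, 0.0)

def is_valid(model, tol=1e-9):
    """Each (state, action) pair must define a distribution that sums to 1."""
    return all(abs(sum(dist.values()) - 1.0) <= tol for dist in model.values())

def sample_next_state(s, a, rng):
    """Draw a next state according to the distribution for (s, a)."""
    dist = T[(s, a)]
    return rng.choices(list(dist.keys()), weights=list(dist.values()), k=1)[0]

rng = random.Random(0)
print(transition_prob("low", "work", "high"))
print(is_valid(T))
print(sample_next_state("low", "work", rng))
```

A dictionary keeps the example readable; for larger state spaces an array indexed by state, action, and next state would be a more common choice.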

What is the objective of MDP?

The objective of the MDP is to maximize the expected return.
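To make "expected return" concrete, here is a minimal sketch that sums the rewards along a trajectory and averages that sum over several sampled trajectories. The discount factor `gamma` is an assumption introduced for this example (with the default `gamma=1.0` the return is simply the total reward), and the reward sequences are made-up numbers.

```python
def discounted_return(rewards, gamma=1.0):
    """Return r0 + gamma*r1 + gamma^2*r2 + ... for one trajectory's rewards."""
    g = 0.0
    # Work backwards so each step adds its reward to the discounted future.
    for r in reversed(rewards):
        g = r + gamma * g
    return g

def estimate_expected_return(reward_sequences, gamma=1.0):
    """Average the return over several sampled trajectories."""
    returns = [discounted_return(rs, gamma) for rs in reward_sequences]
    return sum(returns) / len(returns)

# Hypothetical reward sequences from three sampled trajectories.
samples = [[1.0, 0.0, 1.0], [0.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
print(discounted_return(samples[0]))      # total reward of the first trajectory
print(estimate_expected_return(samples))  # average return across the samples
```

An Agent that maximizes this quantity prefers actions whose rewards add up over the whole trajectory, even when another action would pay more at the current step.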

The goal of the MDP is for the Agent to maximize the total long-term reward it receives from its Environment by choosing the right action in each state. MDP focuses on maximizing not immediate but…